Graph Sketch: AI-Integrated Spatial Mind-Mapping Tool for XR

I created an application demonstrating two different methods of working with node graphs in XR - an intuitive sketch-based interaction method, and an AI assistant that can create, modify and connect nodes at the user's direction.

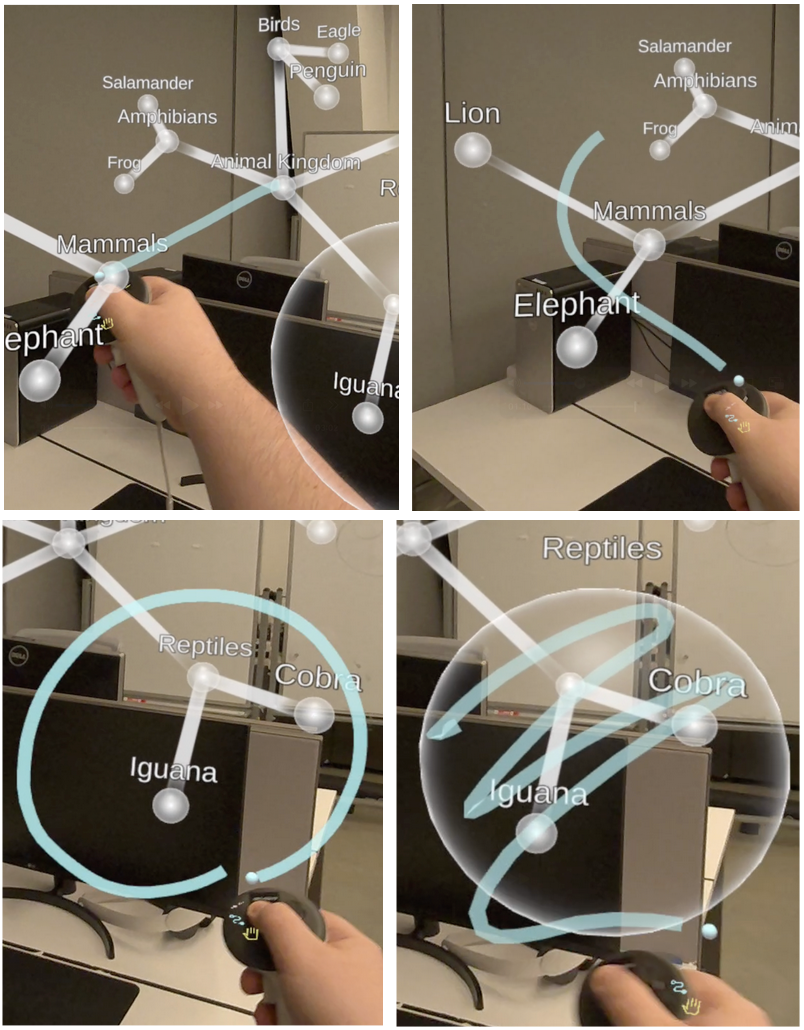

Users can draw 3D lines in the scene, and any nodes that appear to be on the line or within a drawn shape from the user’s perspective are selected. Depending on the type of shape that was drawn (line, bubble, scribble), different actions are carried out, enabling multiple functions to be contained within a single user input.

The user can additionally communicate verbally with an AI assistant to modify or add to the 3D scene. Several guardrails were implemented - including detailed environmental context, prompt engineering, and expert algorithms - to ensure that the AI's actions in the virtual environment match the user’s intent.

The sketch-based interaction methods are an extension of LassoSelect, a previous research project further explained here.

To learn more, read the extended abstract.

Cornell Tech

Independent Research

Spring 2025

Different sketch shapes result in different outcomes. Draw lines to connect nodes, or split them; draw loops to create encompassing bubbles, or scribble over a bubble to remove it.
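As a rough illustration of how one stroke could map to several actions, the sketch below classifies a stroke that has already been flattened to 2D from the user's perspective. It is not the project's actual classifier: the function name `classify_stroke`, the thresholds, and the turning-angle heuristic are all assumptions made for this example.

```python
import math

def classify_stroke(points, closed_ratio=0.2, max_loop_turns=1.5):
    """Classify a 2D stroke as 'line', 'bubble', or 'scribble'.

    Hypothetical heuristic: lots of accumulated turning means a scribble,
    a stroke whose ends nearly meet is a bubble, anything else is a line.
    """
    path_len = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if path_len == 0:
        return "line"  # degenerate stroke; treat as a line

    # Total absolute change in heading, measured in full revolutions.
    turning = 0.0
    for a, b, c in zip(points, points[1:], points[2:]):
        h1 = math.atan2(b[1] - a[1], b[0] - a[0])
        h2 = math.atan2(c[1] - b[1], c[0] - b[0])
        turning += abs((h2 - h1 + math.pi) % (2 * math.pi) - math.pi)
    revolutions = turning / (2 * math.pi)

    if revolutions > max_loop_turns:
        return "scribble"
    gap = math.dist(points[0], points[-1])
    if gap / path_len <= closed_ratio:
        return "bubble"
    return "line"
```

An application could then dispatch on the returned label, for example connecting or splitting nodes for a line, creating a bubble for a loop, and removing a bubble for a scribble.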

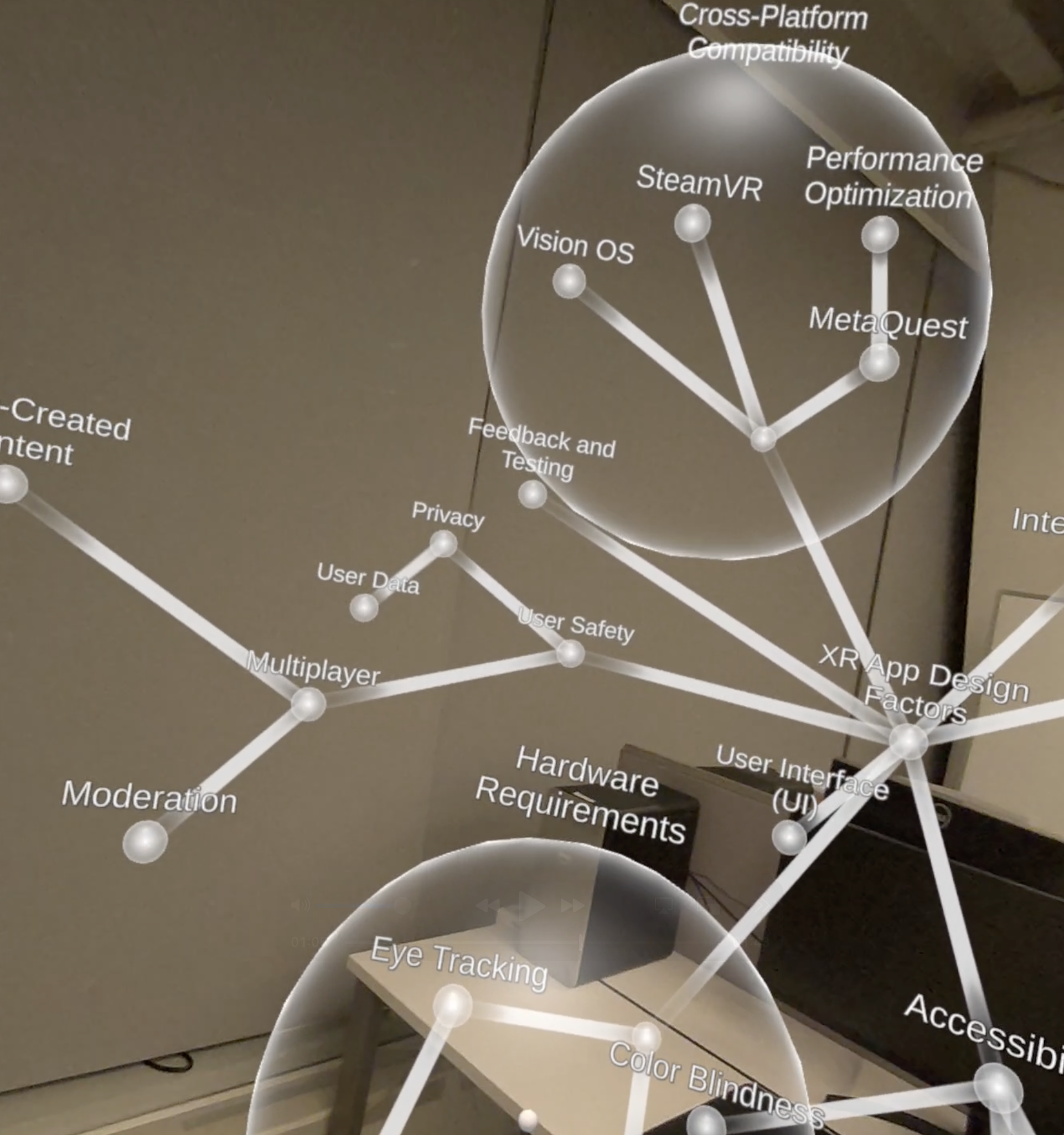

With an AI partner, complex node graphs can be constructed quickly using voice commands.

“Gaze+Pinch” - using eye tracking to select the object you look at when you pinch - is a popular selection method in XR. It requires minimal hand movement, reducing arm strain and making it ideal for crowded spaces or public transportation. However, manipulating grabbed objects can still require significant hand movement after pinching, undermining these benefits. While techniques such as transfer functions can improve manipulation, depth in particular is very difficult to control using small movements.

I created two control mechanisms using microgestures - secondary finger actions separate from the primary hand action - to extend and enhance depth control while pinching. Working in tandem with transfer functions, these techniques enable objects to be placed anywhere in the environment while barely moving one’s hands.
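For readers unfamiliar with transfer functions, the sketch below shows one common form: a gain that grows with input speed, so slow motion stays precise and fast motion covers more distance. The source does not specify which function the project uses; the names `transfer_gain` and `apply_depth_delta`, the smoothstep curve, and all constants are assumptions for illustration only.

```python
def transfer_gain(speed, slow=0.05, fast=0.5, min_gain=1.0, max_gain=8.0):
    """Map input speed (m/s) to a gain between min_gain and max_gain.

    Hypothetical curve: a smoothstep blend between a 'slow' and a 'fast'
    speed, clamped outside that range.
    """
    t = (speed - slow) / (fast - slow)
    t = max(0.0, min(1.0, t))
    s = t * t * (3 - 2 * t)  # smoothstep easing
    return min_gain + (max_gain - min_gain) * s

def apply_depth_delta(object_depth, input_delta, dt):
    """Move an object along the depth axis by a gain-scaled input delta."""
    speed = abs(input_delta) / dt
    return object_depth + transfer_gain(speed) * input_delta
```

The input delta here could come from hand movement or from a microgesture such as a change in pinch position; either way, only the gain curve decides how far the object travels.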

To learn more, read the extended abstract.

Enhancing Gaze+Pinch Depth Control using Microgestures

Cornell Tech

Independent Research

Fall 2024

A visualization of the two demonstrated control mechanisms: “pinch movement” (moving the location of the pinch closer to or farther from the palm) and “index scrolling” (scrolling the middle finger along the top of the index finger).

In the demonstration scene, the user uses microgestures to grab and move cubes into far-away target spheres with minimal hand movement.

Asymmetric Remote Collaboration using Tangible Interaction in AR

Object tracking - the ability to track physical objects in your environment and attach digital content to them - enables tangible interaction methods that allow users to control the digital environment in a very intuitive and natural way. However, problems arise when using tangible interaction for remote collaboration, as two remote users cannot control the same physical object.

In this project, I explored the potential for tangible asymmetric collaboration - scenarios where there is a “local user” who controls the tangible object, and a “remote user” who cannot move the object but can see a visual representation and provide directions. The project is coded entirely in Swift, and is networked for remote collaboration.

Asymmetric tangible collaboration has potential for physical rehabilitation, design visualization, and board games. In a game of chess for instance, each player could move physical versions of their pieces, while seeing digital visualizations of the other player’s pieces.

To learn more, read the extended abstract.

Cornell Tech

Independent Research

Fall 2024

During remote collaboration, users see each other’s digital avatar, alongside a digital visualization of the box that the other user is holding.

The physical object is tracked and brought into the digital environment. A red hologram is created as a target for the user to move the digital object towards; the target turns green and disappears upon being reached.

Multi-Object Image Plane Lasso Selection

Over the course of a semester, I developed a multi-object image plane lasso selection technique as a Unity package for use in VR applications.

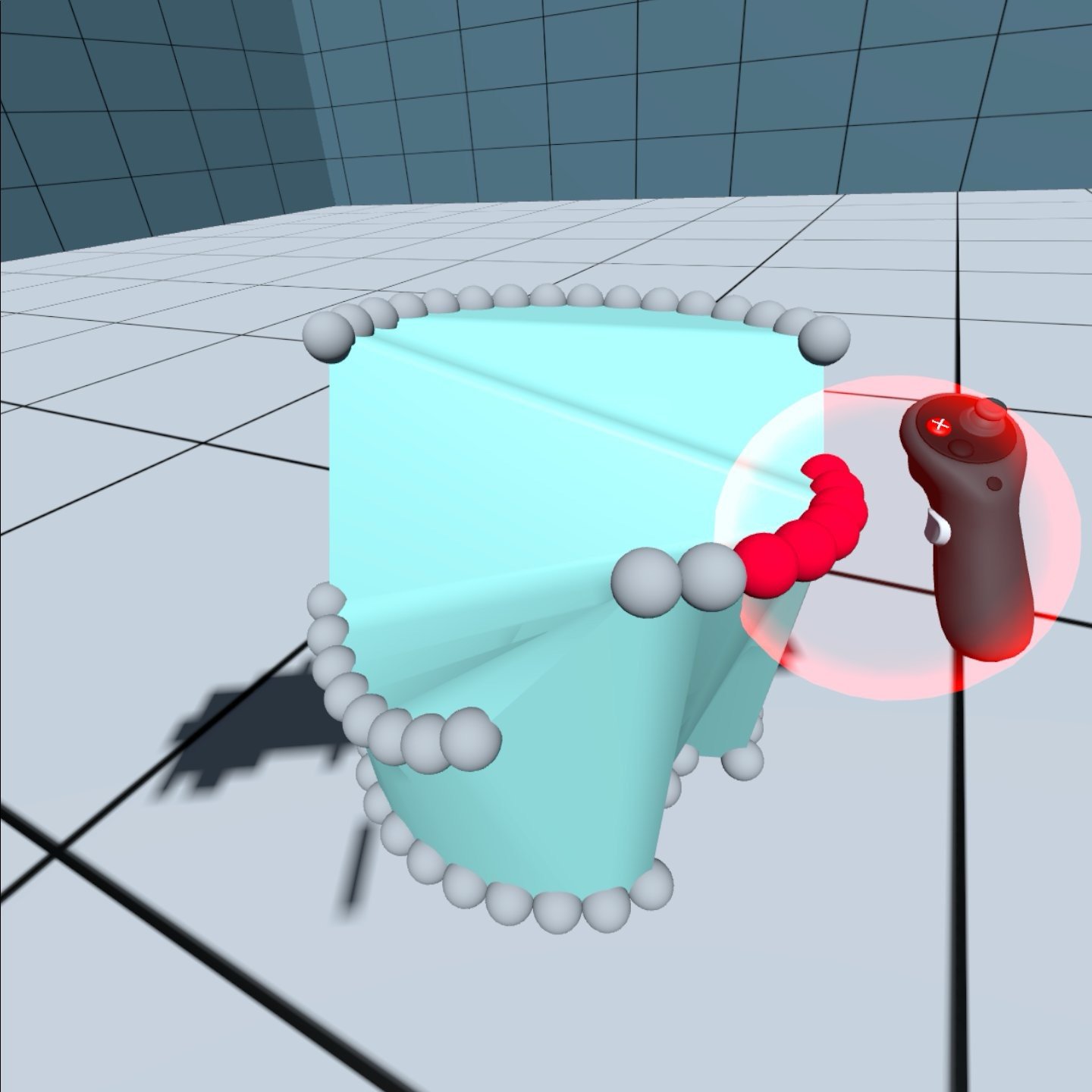

Users of this technique can draw a three-dimensional “lasso” in the virtual environment, and any objects that appear to be within that lasso from the user’s perspective will be selected. Basing selection on the user’s perspective minimizes selection errors while allowing selection at any distance, and drawing is a simple and intuitive method to quickly define a selection area. Users can also draw a line without completing a full loop, and anything that lies along the line from the user’s perspective will be selected.

I wrote scripts that let the user draw a lasso or line through the scene, then project selection rays from the user’s viewpoint through the lasso and into the scene. The package was designed to be compatible with other packages and to integrate into any XRI scene with minimal effort.
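The idea of perspective-based selection can be sketched in a few lines. Note that this example swaps in a simpler, related technique: instead of casting rays through the lasso as the package does, it projects both the lasso and the objects onto an image plane in front of the eye and runs a point-in-polygon test. The function names, the fixed +z view direction, and the treatment of objects as single points are assumptions for illustration.

```python
def project(point, eye):
    """Perspective-project a 3D point onto a plane one unit in front of the eye.

    Assumes the viewer looks down +z; returns None for points behind the eye.
    """
    dx, dy, dz = (p - e for p, e in zip(point, eye))
    if dz <= 0:
        return None
    return (dx / dz, dy / dz)

def inside(polygon, pt):
    """Even-odd ray-crossing test for a 2D point against a closed polygon."""
    x, y = pt
    hit = False
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                hit = not hit
    return hit

def lasso_select(eye, lasso_3d, objects):
    """Return names of objects that appear inside the lasso from the eye's view."""
    polygon = [project(p, eye) for p in lasso_3d]
    if any(p is None for p in polygon):
        return []  # part of the lasso is behind the viewer; skip selection
    selected = []
    for name, position in objects.items():
        q = project(position, eye)
        if q is not None and inside(polygon, q):
            selected.append(name)
    return selected
```

Because the test happens after projection, an object is selected whenever it appears inside the lasso, regardless of how far away it is.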

To learn more, read the extended abstract and package documentation.

Cornell Tech

Independent Research

Spring 2024

A demonstration of the lasso selection technique, where the user has drawn a complex lasso that selects specific objects (highlighted in yellow) while avoiding others.

This debug tool visualizes the raycasting technique used in selection. Rays originate at the user’s eyes and pass through the lasso (yellow). Rays that hit an object (red) select that object; other rays pass without hitting anything (orange).

Over the course of a two-week full-time research position, I developed a radial marking menu as a Unity package for use in VR applications. The menu is fully customizable by the user.

Navigation and selection are simple and intuitive: holding down the menu button opens the menu, and moving your controller selects an option. Hovering over a submenu (marked with an outwards arrow) will take you to that submenu. Releasing the menu button while hovering over an element activates that element, triggering its corresponding Unity event. Because each function is activated through a single connected movement, users can develop muscle memory and access tools and options without thinking.
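The core of this kind of radial selection is mapping the controller's offset from the menu center to a section index. The sketch below shows one way that mapping could work; it is not taken from the package, and the function name `sector_index`, the dead-zone radius, and the convention that section 0 points straight up are assumptions.

```python
import math

def sector_index(dx, dy, sections, dead_zone=0.02):
    """Return which menu section the controller offset (dx, dy) points at.

    Section 0 is centered on 'up' and indices increase clockwise.
    Returns None while the controller is still inside the dead zone.
    """
    if math.hypot(dx, dy) < dead_zone:
        return None
    angle = math.atan2(dx, dy) % (2 * math.pi)  # 0 = up, clockwise positive
    width = 2 * math.pi / sections
    # Shift by half a section so each section is centered on its direction.
    return int((angle + width / 2) // width) % sections
```

With four sections, an offset of `(0.1, 0.0)` (straight right) gives index 1, and a small offset such as `(0.001, 0.001)` gives `None` because it falls inside the dead zone.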

Users can create menu items with icons or labels that trigger public functions in the Unity scene, as well as create nested submenus to organize them. This allows the marking menu package to be used for practically any purpose.

To allow the UI to seamlessly accommodate user settings and change on the fly, I programmed a custom shader that renders the back of the UI entirely through code. The number of sections, which sections are submenus, and the size of the menu are all controlled by parameters attached to the shader.

To learn more, read the package documentation.

Radial Marking Menu for VR

Cornell Tech

XR Collaboratory Research

Winter 2023

A video demonstration of the package. Primitive shapes (both in the background & attached to the tool) are used to demonstrate the menu’s functionality; selecting a shape in the menu triggers functions in the scene that will make the corresponding shape in the background teleport, and will also attach that shape to the controller.

A demonstration of the submenu system; hovering over “More Options” (right) will lead you to the left menu, while hovering over “Back” (left) will take you back to the right menu.

The package has an optional “expert mode” where the menu will start out hidden (left) before expanding to show all icons after a short amount of time (right). This encourages development of muscle memory by discouraging reliance on reading icons, and allows for expert users to navigate & activate functions without ever opening the full menu, reducing distractions.

For our final project in INFO5340: Virtual and Augmented Reality, I worked in a team of 4 to create a 3D modeling app in Unity for the Quest 2. We were tasked with creating a modeling app that replicated features and interaction techniques from Gravity Sketch, a popular VR app. Starting from Unity’s basic XR Interaction Toolkit, we implemented a swath of features enabling complex navigation, selection and manipulation of objects.

As the only member of our team with previous Unity experience, I took a leadership role in the team, delegating tasks and helping my team learn how to use the program more effectively. I also assisted with debugging & troubleshooting throughout our project.

My other responsibilities included:

- Implementing a 3D color visualizer to change object colors
- Implementing undo/redo using a command-based system
- Implementing an input action controller & state manager
- Using Blender to design and model custom assets when needed
- Making numerous design/UX improvements throughout all of our app’s features
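For context on the command-based undo/redo mentioned above, here is a minimal sketch of the general pattern: each edit is an object that knows how to apply and reverse itself, and a history keeps two stacks. This is not the project's code (which was written for Unity); the class names `SetColor` and `History` and the dictionary-based scene object are hypothetical.

```python
class SetColor:
    """A reversible command that changes an object's color."""

    def __init__(self, obj, color):
        self.obj = obj
        self.color = color
        self.previous = None

    def execute(self):
        self.previous = self.obj["color"]  # remember state for undo
        self.obj["color"] = self.color

    def undo(self):
        self.obj["color"] = self.previous


class History:
    """Tracks executed commands so they can be undone and redone."""

    def __init__(self):
        self.undo_stack = []
        self.redo_stack = []

    def run(self, command):
        command.execute()
        self.undo_stack.append(command)
        self.redo_stack.clear()  # a new edit invalidates the redo branch

    def undo(self):
        if not self.undo_stack:
            return False
        command = self.undo_stack.pop()
        command.undo()
        self.redo_stack.append(command)
        return True

    def redo(self):
        if not self.redo_stack:
            return False
        command = self.redo_stack.pop()
        command.execute()
        self.undo_stack.append(command)
        return True
```

A design like this also makes it straightforward to step through the edit history repeatedly, since each step is just one `undo()` or `redo()` call.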

Our work was chosen as one of the top 2 projects from the class to be showcased in Cornell Tech’s open studio event.

This information can also be found on our project page.

Creative Tools for VR

Cornell Tech

INFO5340: Virtual and Augmented Reality

Fall 2023

Our feature walkthrough, where a team member describes and demonstrates all of the features we implemented.

Highlighted Features

Users can rotate the thumbstick to unwind time, traversing the scene’s edit history quickly and intuitively.

In mesh editing mode, users can grab vertices to edit primitive objects and create more complex meshes.

Users can easily change the color of grabbed objects by moving their controller within a 3D color visualizer.

In scaling mode, users can grab constrained axes to quickly adjust the size of objects.